In the early days of computing, displaying information on a screen was a significant challenge. As technology advanced, various display technologies emerged, each with its own capabilities and limitations. This blog post explores the evolution of the technology used in old PC monitors, tracing its development from mechanical displays to the modern LCD and LED screens we use today.

Technologies Used in Old Computer Monitors

Mechanical displays and oscilloscope displays were the earliest technologies. Teletype displays and monochrome CRT monitors were common in early PCs. Color CRT monitors with graphics modes like CGA, EGA, and VGA dominated until flat panels emerged.

Mechanical Displays

One of the earliest display technologies used in computers was the mechanical display. These displays used physical components such as rotating drums or movable type to represent characters or simple graphics. While primitive by today’s standards, they were an essential step in the evolution of computer displays.

Example: The IBM 826 Alphabetic Printer, introduced in 1953, used a rotating drum with characters embossed on its surface to print output on paper.

Oscilloscope Displays

Another early display technology was the oscilloscope display. These displays used a cathode ray tube (CRT) to generate a beam of electrons that could be deflected horizontally and vertically to create images on a phosphor-coated screen.

Example: The DEC PDP-1 computer, introduced in 1959, used an oscilloscope display for its output.

Teletype Displays

Teletype displays, also known as teleprinters, were widely used in the early days of computing. These devices were essentially electric typewriters that could receive and print data transmitted over a communication line.

Example: The ASR-33 Teletype, introduced by Teletype Corporation in the 1960s, was a popular teletype display used with many early computer systems.

Other Old Monitor Technologies

Cathode Ray Tube (CRT) monitors were dominant from the 1970s to the early 2000s. Monochrome displays could show only one color, usually green or amber. Electroluminescent displays (ELDs) were an early flat panel technology built around a phosphor layer. ELDs offered a thin design and low power consumption, but limited resolution and contrast.

Cathode Ray Tube (CRT) Monitors

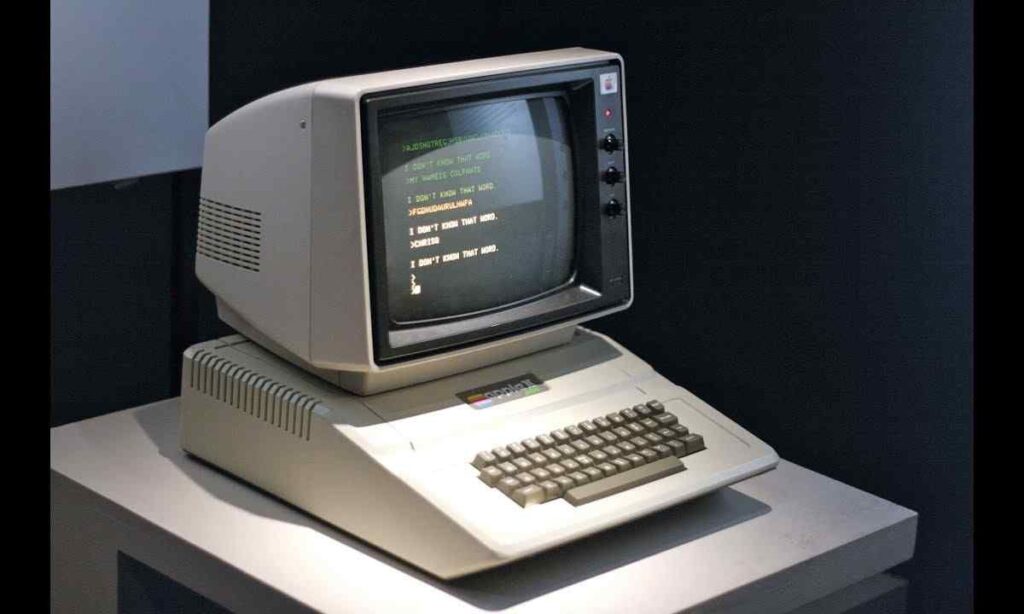

Cathode Ray Tube (CRT) monitors were the dominant display technology for personal computers from the 1970s through the early 2000s. These monitors used a vacuum tube with an electron gun that fired a beam of electrons onto a phosphor-coated screen, sweeping it line by line to create an image.
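Because the beam draws the picture one line at a time, a CRT's refresh rate follows directly from how fast pixels are clocked out and how many pixels and lines make up a full frame, including the blanking intervals during which the beam returns to its starting position. The sketch below is only an illustration: the function name is made up for this post, and the numbers are the commonly cited timing for the standard VGA 640×480 mode (25.175 MHz pixel clock, 800 total pixels per line, 525 total lines).

```python
def scan_rates(pixel_clock_hz, total_pixels_per_line, total_lines):
    """Return (horizontal scan rate, vertical refresh rate) in Hz.

    The totals include blanking, so they are larger than the visible
    resolution (800x525 total for a visible 640x480 picture).
    """
    horizontal_hz = pixel_clock_hz / total_pixels_per_line
    vertical_hz = horizontal_hz / total_lines
    return horizontal_hz, vertical_hz


# Commonly cited VGA 640x480 timing (assumed values, see text above).
h, v = scan_rates(25_175_000, 800, 525)
print(f"{h / 1000:.3f} kHz, {v:.2f} Hz")  # prints "31.469 kHz, 59.94 Hz"
```

Raising the refresh rate at the same resolution means raising the pixel clock and horizontal scan rate, which is why a given CRT could usually run faster at lower resolutions.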

CRT Monitors Advantages:

- High refresh rates (85 Hz was common, with higher rates available at reduced resolutions)

- Wide viewing angles

- Good color reproduction

CRT Monitors Disadvantages:

- Large and bulky

- High power consumption

- Susceptible to screen burn-in

Monochrome Displays

Early personal computers often used monochrome displays, which could display only one color (usually green or amber) on a black background. These displays were inexpensive and offered good resolution for text-based applications.

Example: The IBM Monochrome Display Adapter (MDA) was a popular monochrome display adapter used with early IBM-compatible PCs.

Electroluminescent Displays (ELDs)

Electroluminescent displays (ELDs) were an early flat panel display technology that used a thin layer of phosphor material sandwiched between two conductive surfaces. When a voltage was applied across the layer, the phosphor emitted light, creating an image.

ELD Advantages:

- Thin and lightweight

- Low power consumption

- Wide viewing angles

ELD Disadvantages:

- Limited resolution

- Poor contrast and color reproduction

- Susceptible to image burn-in

Early IBM-Compatible PCs

Early IBM-compatible PCs used various graphics modes and display adapters, each with its own capabilities and limitations. Some notable examples include:

- CGA (Color Graphics Adapter): Introduced in 1981, the CGA had a 16-color palette. It could display 4 of those colors at a time at a resolution of 320×200, or 2 colors at 640×200.

- EGA (Enhanced Graphics Adapter): Introduced in 1984, the EGA could display 16 colors at a time, chosen from a palette of 64, at a resolution of 640×350. It also offered 16-color modes at 640×200.

- VGA (Video Graphics Array): Introduced in 1987, the VGA was a significant improvement over previous graphics adapters, offering a resolution of 640×480 with 16 colors or 320×200 with 256 colors.
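The trade-off between resolution and color count in these modes comes down to video memory: one full screen needs roughly width × height × bits per pixel. Here is a minimal sketch of that arithmetic. The function name and the mode table are illustrative only, and the calculation ignores how each adapter actually laid out its memory.

```python
def framebuffer_bytes(width, height, colors):
    """Approximate bytes needed to hold one screen at a given color count."""
    # Bits needed to tell `colors` values apart: 2 -> 1, 4 -> 2, 16 -> 4, 256 -> 8.
    bits_per_pixel = (colors - 1).bit_length()
    return width * height * bits_per_pixel // 8


# (label, width, height, simultaneous colors), taken from the list above.
modes = [
    ("CGA 320x200, 4 colors", 320, 200, 4),
    ("CGA 640x200, 2 colors", 640, 200, 2),
    ("EGA 640x350, 16 colors", 640, 350, 16),
    ("VGA 640x480, 16 colors", 640, 480, 16),
    ("VGA 320x200, 256 colors", 320, 200, 256),
]

for label, width, height, colors in modes:
    print(f"{label}: {framebuffer_bytes(width, height, colors):,} bytes")
```

Both CGA modes come out at 16,000 bytes, which fits in the CGA's 16 KB of video memory. That is why doubling the horizontal resolution on that card meant dropping from 4 colors to 2.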

What Was the Old Computer Screen Technology?

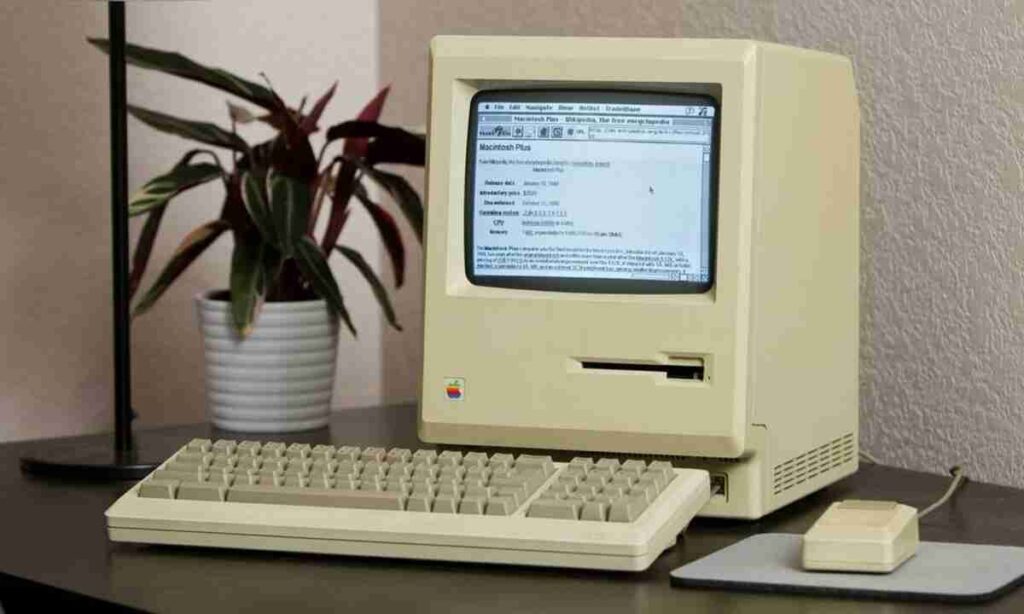

The most common screen technology used in old computers was the Cathode Ray Tube (CRT) monitor. CRT monitors were the dominant display technology for personal computers from the 1970s through the early 2000s, before being gradually replaced by flat panel technologies like LCD and LED displays.

What Technology Is Used for Computer Monitors?

Modern computer monitors primarily use two display technologies:

- Liquid Crystal Display (LCD): LCD monitors use a layer of liquid crystal sandwiched between two glass panels fitted with polarizing filters. Applying a voltage to the liquid crystals controls how much light passes through each pixel, creating an image.

- Light-Emitting Diode (LED): LED monitors are essentially a variation of LCD technology, but they use light-emitting diodes as the backlight source instead of traditional cold cathode fluorescent lamps (CCFLs).

How Have Monitors Evolved Over Time?

Computer monitors have evolved significantly over the past decades, driven by advances in display technology and changing user demands. Here’s a brief overview of how monitors have evolved:

- Monochrome to Color: Early monitors could display only one color (usually green or amber) on a black background. As technology improved, color displays became more common, first with limited color palettes and later with support for millions of colors.

- Increased Resolution: Display resolutions have steadily increased, from the low-resolution CGA and EGA modes to the high definition displays we have today.

- Flat-Panel Displays: CRT monitors were gradually replaced by flat panel technologies like LCD and LED, which offered slimmer profiles, lower power consumption and, as the technology matured, better image quality.

- Widescreen Aspect Ratios: As multimedia content became more prevalent, widescreen aspect ratios (like 16:9 and 16:10) became more popular, replacing the traditional 4:3 aspect ratio.

- Higher Refresh Rates: Modern monitors often support refresh rates of 120 Hz or higher, reducing motion blur and improving the perceived smoothness of motion.

- Advanced Features: Modern monitors often include features like high dynamic range (HDR) support, variable refresh rate technologies (e.g., FreeSync, G-Sync) and advanced color calibration options.
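Two of the figures in this list are easy to work out by hand. An aspect ratio is just the resolution reduced to lowest terms, and a refresh rate translates into the time each frame stays on screen. The helper names below are made up for this post, as a quick sketch of the arithmetic:

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce a resolution to its simplest width:height ratio."""
    divisor = gcd(width, height)
    return width // divisor, height // divisor


def frame_time_ms(refresh_hz):
    """Time between screen refreshes, in milliseconds."""
    return 1000.0 / refresh_hz


print(aspect_ratio(640, 480))    # (4, 3)
print(aspect_ratio(1920, 1080))  # (16, 9)
print(aspect_ratio(1920, 1200))  # (8, 5), which is the same ratio as 16:10
print(round(frame_time_ms(60), 2), round(frame_time_ms(120), 2))  # 16.67 8.33
```

Going from 60 Hz to 120 Hz halves the time between frames, which is where the smoother motion comes from.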

What Is the History of Monitor Technology?

The history of monitor technology can be traced back to the early days of computing, when mechanical displays and oscilloscope displays were used to display information. As technology advanced, new display technologies emerged, each with its own advantages and limitations.

Here’s a brief timeline of the history of monitor technology:

- 1950s: Mechanical displays and oscilloscope displays were used in early computers.

- 1960s: Teletype displays (teleprinters) became popular for displaying output from computers.

- 1970s: Cathode Ray Tube (CRT) monitors became the dominant display technology for personal computers.

- 1980s: Monochrome and color CRT monitors were introduced with graphics modes like CGA, EGA, and VGA.

- 1990s: Flat panel displays like LCD and plasma displays began to emerge, offering slimmer profiles and lower power consumption.

- 2000s: LCD and LED displays rapidly replaced CRT monitors in the consumer market due to their advantages in size, weight and power consumption.

- 2010s: Advancements in display technology led to higher resolutions, wider color gamuts, HDR support and higher refresh rates.

FAQs

What Are the Two Most Common Types of Monitor Technologies?

- Liquid Crystal Display (LCD)

- Light-Emitting Diode (LED)

When Was the First PC Monitor Made?

The first monitor for the IBM PC was the IBM 5151 Monochrome Display, introduced in 1981 and driven by the IBM Monochrome Display Adapter (MDA). It was a monochrome CRT monitor that displayed text only, with no graphics modes: 80 columns by 25 rows of 9×14-pixel characters, equivalent to a resolution of 720×350 pixels.

What Was the First Computer Monitor?

While not a personal computer monitor, one of the earliest computer displays was the oscilloscope display used in the DEC PDP-1 computer, introduced in 1959. It used a cathode ray tube (CRT) to generate images on a phosphor-coated screen.

Conclusion

The evolution of the technology used in old PC monitors has been a remarkable journey, spanning from mechanical displays and oscilloscope displays to the high-resolution, color-rich displays we have today. Each technological advancement has brought improvements in resolution, color reproduction, and overall image quality, enabling more immersive and productive computing experiences. As display technology continues to evolve, we can expect even more exciting developments.